New version

AAAI控制实验图/2-state.pdf

0 → 100644

File added

AAAI控制实验图/7-state.pdf

0 → 100644

File added

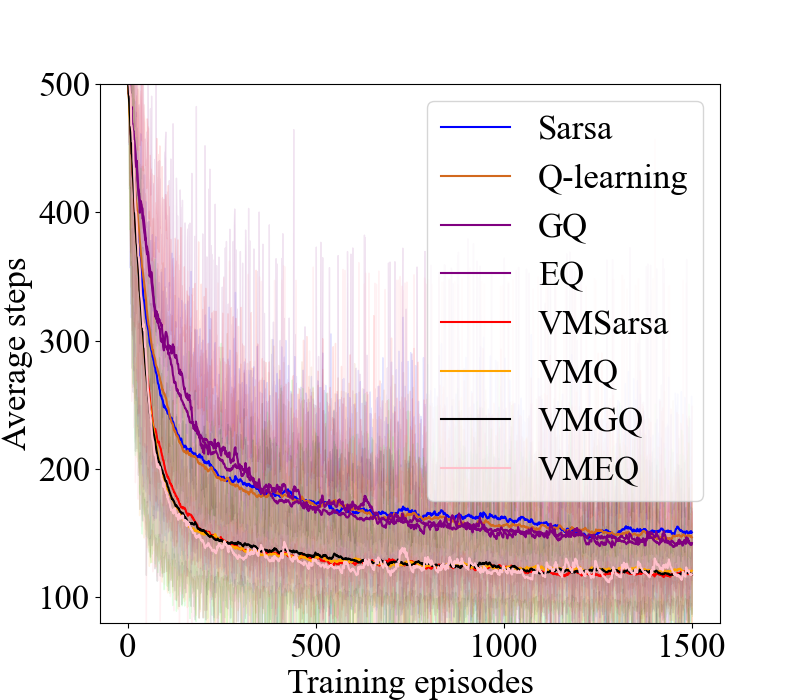

AAAI控制实验图/acrobot.pdf

0 → 100644

File added

AAAI控制实验图/acrobot.png

0 → 100644

257 KB

AAAI控制实验图/acrobot.svg

0 → 100644

This source diff could not be displayed because it is too large.

AAAI控制实验图/cl.pdf

0 → 100644

File added

AAAI控制实验图/cliff_walking.svg

0 → 100644

This source diff could not be displayed because it is too large.

AAAI控制实验图/maze.pdf

0 → 100644

File added

AAAI控制实验图/maze.svg

0 → 100644

This source diff could not be displayed because it is too large.

AAAI控制实验图/mt.pdf

0 → 100644

File added

AAAI控制实验图/mt.svg

0 → 100644

This source diff could not be displayed because it is too large.

Apendix/pic/BairdExample.tex

0 → 100644

Apendix/pic/maze_13_13.pdf

0 → 100644

File added

NEW_aaai/aaai25.bib

0 → 100644

This diff is collapsed.

NEW_aaai/aaai25.bst

0 → 100644

This diff is collapsed.

NEW_aaai/aaai25.sty

0 → 100644

This diff is collapsed.

NEW_aaai/anonymous-submission-latex-2025.aux

0 → 100644

NEW_aaai/anonymous-submission-latex-2025.bbl

0 → 100644

NEW_aaai/anonymous-submission-latex-2025.blg

0 → 100644

NEW_aaai/anonymous-submission-latex-2025.log

0 → 100644

This diff is collapsed.

NEW_aaai/anonymous-submission-latex-2025.pdf

0 → 100644

File added

NEW_aaai/anonymous-submission-latex-2025.tex

0 → 100644

NEW_aaai/figure1.pdf

0 → 100644

File added

NEW_aaai/figure2.pdf

0 → 100644

File added

NEW_aaai/main/conclusion.tex

0 → 100644

NEW_aaai/main/experiment.tex

0 → 100644

NEW_aaai/main/introduction.tex

0 → 100644

NEW_aaai/main/motivation.tex

0 → 100644

This diff is collapsed.

NEW_aaai/main/pic/2-state.pdf

0 → 100644

File added

NEW_aaai/main/pic/2StateExample.pdf

0 → 100644

File added

NEW_aaai/main/pic/7-state.pdf

0 → 100644

File added

NEW_aaai/main/pic/Acrobot_complete.pdf

0 → 100644

File added

NEW_aaai/main/pic/BairdExample.tex

0 → 100644

NEW_aaai/main/pic/acrobot.pdf

0 → 100644

File added

NEW_aaai/main/pic/cl.pdf

0 → 100644

File added

NEW_aaai/main/pic/cw_complete.pdf

0 → 100644

File added

NEW_aaai/main/pic/dependent_new.pdf

0 → 100644

File added

NEW_aaai/main/pic/inverted_new.pdf

0 → 100644

File added

NEW_aaai/main/pic/maze.pdf

0 → 100644

File added

NEW_aaai/main/pic/maze_13_13.pdf

0 → 100644

File added

NEW_aaai/main/pic/maze_complete.pdf

0 → 100644

File added

NEW_aaai/main/pic/mt.pdf

0 → 100644

File added

NEW_aaai/main/pic/mt_complete.pdf

0 → 100644

File added

NEW_aaai/main/pic/randomwalk.tex

0 → 100644

NEW_aaai/main/pic/run_baird.svg

0 → 100644

This source diff could not be displayed because it is too large.

NEW_aaai/main/pic/tabular_new.pdf

0 → 100644

File added

NEW_aaai/main/pic/two_state.svg

0 → 100644

This source diff could not be displayed because it is too large.

NEW_aaai/main/preliminaries.tex

0 → 100644

This diff is collapsed.

NEW_aaai/main/relatedwork.tex

0 → 100644

NEW_aaai/main/theory.tex

0 → 100644

This diff is collapsed.

论文草稿.txt

0 → 100644