New version

NEW_aaai/main/pic/2-state-offpolicy.pdf

0 → 100644

File added

NEW_aaai/main/pic/2-state-onpolicy.pdf

0 → 100644

File added

NEW_aaai/main/pic/BairdExample copy 2.tex

0 → 100644

NEW_aaai/main/pic/BairdExample copy.tex

0 → 100644

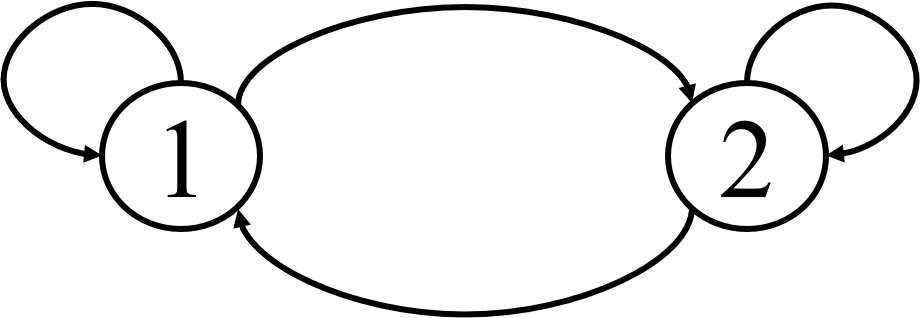

环境图片/2-state.png

0 → 100644

11.4 KB

画图.pptx

0 → 100644

File added

评估实验图/2-state-offpolicy.pdf

0 → 100644

File added

评估实验图/2-state-onpolicy.pdf

0 → 100644

File added